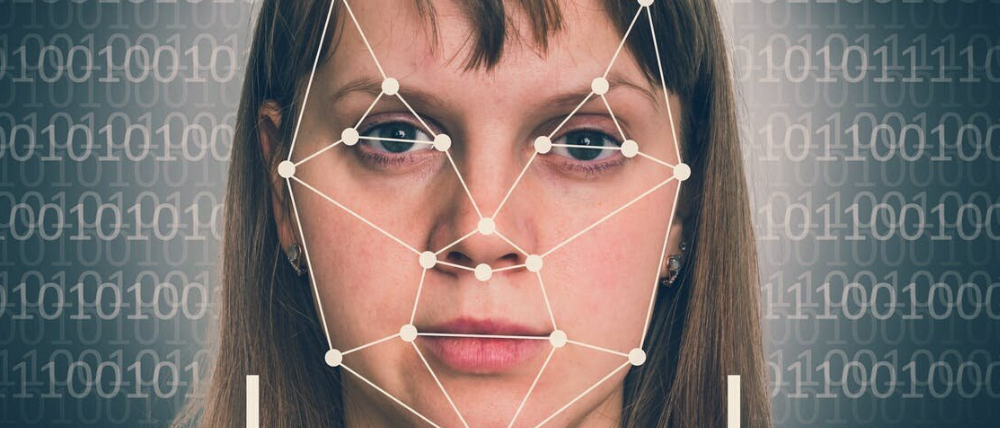

Identifying AI Imposters as Deepfake Tech Becomes Commonplace

Jeff Wolverton

The Challenge of Realistic AI

“The realism of generative AI poses a significant challenge,” said Carl Froggett, CIO of Deep Instinct, a firm specializing in AI cybersecurity.

AI tools for generating video and audio are advancing rapidly. For example, OpenAI has introduced Sora, a video generation tool, and Voice Engine, an audio tool that can convincingly replicate a person’s voice from a short audio clip. Because of the potential for misuse, OpenAI has released Voice Engine only to a limited group of users.

Froggett pointed to his own British accent and regional speech patterns as traits AI could mimic from publicly available material, such as recorded speeches, to produce highly realistic audio and video impersonations.

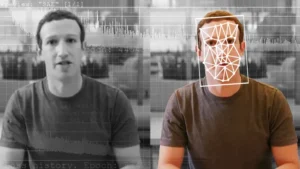

Notable Cases of AI Imposter Misuse

There have already been notable instances of deepfake misuse. In one reported case in Hong Kong, a company employee was tricked into transferring $25 million to a fraudulent account after a Zoom call featuring deepfaked images of her colleagues. The case illustrates the kind of threat deepfake technology may increasingly pose.

Despite restrictions on access to advanced audio and video tools, illegal markets for similar technologies are burgeoning. “The criminals are just beginning to explore these tools,” Froggett noted.

Detecting AI Imposters: Practical Methods

According to Rupal Hollenbeck, president of Check Point Software, it is possible to create a perfect deepfake from just a short voice clip. Deepfake tools are also becoming cheaper and easier to obtain, a shift Hollenbeck believes will change the landscape of cybercrime.

Simple Techniques to Identify AI Imposters

Companies are now adopting a range of measures to guard against deepfake attacks, and individuals can borrow many of them as everyday precautions in the era of generative AI. Hollenbeck suggested one simple way to expose a video imposter: ask the person to perform a physical action during the call, such as turning their head, which current AI cannot yet replicate realistically.

However, such tricks may work only for a short time as AI technology continues to evolve, according to Chris Pierson, CEO of BlackCloak. Pierson recommends traditional authenticity checks, such as “proof of life” during video calls, and the use of coded communications for added security.

Nirupam Roy, an assistant professor at the University of Maryland, noted that deepfakes are increasingly used for harmful purposes beyond financial scams, such as damaging reputations. Roy’s team has developed TalkLock, a system that helps verify the authenticity of audiovisual content by embedding QR codes.

Living Securely in the Age of AI Imposters

Eyal Benishti, CEO of Ironscales, advises organizations to adopt multi-factor authentication strategies to reduce the risks posed by deepfakes. These strategies include segregating duties and requiring multiple approvals for sensitive actions, so that even a successful social engineering attack cannot, on its own, result in fraud.
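
To make the idea of multiple approvals more concrete, here is a minimal Python sketch of a two-person rule for outgoing payments. Everything in it is a hypothetical illustration, not part of any product and not a prescription from Benishti: the names `TransferRequest`, `APPROVAL_THRESHOLD`, and `REQUIRED_APPROVERS`, the $10,000 threshold, and the requirement of two approvers. A real control would live inside a payment or finance system and would also confirm each approval through a separate, trusted channel.

```python
from dataclasses import dataclass, field

# Hypothetical policy values, chosen for illustration only.
APPROVAL_THRESHOLD = 10_000  # payments at or above this amount need extra sign-off
REQUIRED_APPROVERS = 2       # distinct approvers required, excluding the requester


@dataclass
class TransferRequest:
    """A hypothetical payment request governed by a two-person approval rule."""
    requester: str
    amount: float
    destination: str
    approvers: set[str] = field(default_factory=set)

    def approve(self, approver: str) -> None:
        # Segregation of duties: whoever asked for the transfer cannot approve it.
        if approver == self.requester:
            raise PermissionError("the requester cannot approve their own transfer")
        # A set ignores repeat approvals from the same person.
        self.approvers.add(approver)

    def can_execute(self) -> bool:
        # Small payments proceed; large ones need enough distinct approvers.
        if self.amount < APPROVAL_THRESHOLD:
            return True
        return len(self.approvers) >= REQUIRED_APPROVERS


if __name__ == "__main__":
    # A large hypothetical transfer: a single approver is not enough.
    request = TransferRequest(requester="alice", amount=25_000_000, destination="acct-001")
    request.approve("bob")
    print(request.can_execute())  # False: only one approver so far
    request.approve("bob")        # a repeat approval does not count twice
    print(request.can_execute())  # False
    request.approve("carol")
    print(request.can_execute())  # True
```

The point of the design is that a convincing deepfake call may fool one person, but a rule like this forces a fraudster to deceive several independent people before any money moves.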

The emergence of deepfakes underscores the importance of diligence and caution, as the old adage “seeing is believing” becomes less reliable. As Pierson emphasized, slowing down and carefully considering each decision can be an effective defense against the pressure to act quickly that fraudsters so often apply.

Start a conversation with us at PivIT Strategy to fully understand this threat and the steps you can take to protect yourself.

Jeff Wolverton

Jeff, the CEO of PivIT Strategy, brings over 30 years of IT and cybersecurity experience to the company. He began his career as a programmer and worked his way up to the role of CIO at a Fortune 500 company before founding PivIT Strategy.